Lesson evaluation

Abstract

By making time for and intentionally designing a regular (end of) lesson evaluation, teachers can take the pulse of the cohort: how students' learning is progressing and what should be addressed next. This regular evaluative feedback can inform more inclusive learning design tailored to the needs of the cohort. This chapter is about the why, what and how of inclusive lesson evaluation.

About the case studies

There are two case studies:

1. In the printed/ebook edition, a narrative by Flower Darby (USA) entitled ‘Inclusive lesson closures to evaluate learning’ with many practical ideas about how to gain evaluative feedback from students.

2. Below, a case study by Xinli Wang (Canada) about ‘End of lesson student evaluative feedback’ which focusses on three specific ways to gather lesson evaluations from students, both during the lesson and at the end.

End of lesson student evaluative feedback

By Xinli Wang (Canada)

There is so much we can learn from our students. Student feedback on teaching is an important tool for course instructors to improve their teaching and students’ learning experience (Cohen 1980). In most colleges and universities, students are asked to provide course feedback for their instructors at the end of the semester. There is a lot of value in standardised course evaluations (Costin et al. 1973). However, waiting until the end of the semester is not enough, because that feedback only helps instructors the next time they teach the course. If instructors want to get student feedback and improve their teaching right there and then, they have to start early. This could take the form of a formal, anonymous survey early on, conversations with students after class, or regular lunches with students, as long as it does not cause feedback fatigue. When we ask our students to share their feedback, we naturally become more aware of our own teaching practice and notice aspects that we might otherwise overlook.

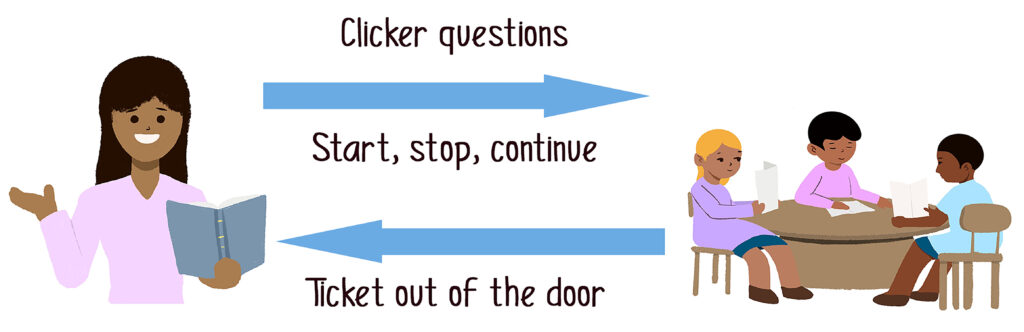

I’ve taught college- and university-level mathematics for about 10 years now and have tried to make my classroom inclusive by using student feedback in a few different ways. In the classroom, I try to engage underserved and marginalized student groups by using: (1) clicker questions, (2) Start-Stop-Continue (Newton 2015), and (3) Ticket Out of the Door (Marks 1988), as shown in the image. I will briefly discuss each of these ways of eliciting student feedback and evaluation.

Image: Three ways to trigger evaluative feedback dialogue with students

Clicker questions

Classroom response systems, sometimes called clickers, use interactive technology that enables instructors to pose questions to students and immediately collect and view the responses of the entire class. After students’ responses have been collected, instructors can address any doubts that arise or clarify a key concept. Typically, in a one-hour lecture, I use 3-4 clicker questions that focus on conceptual understanding. These questions are made available on Mentimeter (an interactive presentation software) and students can key in a pre-set code to access them. Results are available right after students cast their votes, so I can adjust my lecture accordingly. Compared with asking the class “Are there any questions?”, this method has proved a very effective way for me to know where my students are in real time. If you are interested in trying it out in your classroom, I highly recommend the Instructor’s Guide developed by the CU Science Education Initiative and the UBC Carl Wieman SEI (2009).

Start, Stop, Continue

I implemented “Start, Stop, Continue” in two first-year math courses. One class had 66 students and the other had 146. Because of the difference in size, the activity was administered differently. In the smaller class, students attending a lecture in week 5 (they had their first assessment in week 4) were each given an index card and asked to answer the following 3 questions anonymously:

1. What am I doing in our class that isn’t working? (Something I should STOP doing)

2. What should I put in place to improve your learning experience? (Something I should START doing)

3. What is working well? (Something I should CONTINUE doing)

For the larger class, I created a survey in Microsoft Forms containing the same 3 questions and shared the link with the class via a QR code during my lecture. The link was also sent to them by email ahead of time. I set aside 5 minutes in my lecture for them to fill in the form, and most of them submitted their responses in class.

This activity promotes openness in the classroom, which helps both students’ learning experience and the instructor’s teaching. When students saw the lectures change in response to their feedback, they became more willing to share their opinions with the instructor in general. Students were more engaged after realizing their voice was heard. This activity works well for both small and large classes: it’s quick to implement and the instructor can get a sense of what works and what doesn’t right away.

Ticket out of the door

Ticket Out of the Door activities usually happen right before the lecture ends: students are asked to write down their “muddiest points” anonymously on a piece of paper before they leave the classroom. I make sure their questions are addressed in the first 5-10 minutes of the next lecture. This activity gives students the opportunity to synthesize and reflect on what they have learned without the pressure of sharing what they don’t know with the whole group. This can also be done online: you can either allow students to type their muddiest point in the chat at the end of a lecture or Q&A session, or have them write on a shared whiteboard or document, depending on which technology tools you are most comfortable with. Personally, I have shared an Excel worksheet with the whole class since the first day of the semester, and we use it to record students’ questions and comments, including the muddiest points, on a weekly basis.

These evaluative feedback methods are inclusive because students can safely share their thoughts, ideas, and concerns without being asked to speak up in front of the whole class. The data and student feedback obtained were incorporated into my own and my colleagues’ teaching practices afterwards, to make teaching better for the students.

References

Cohen, P.A. (1980). Effectiveness of student-rating feedback for improving college instruction: A meta-analysis of findings. Research in Higher Education, 13, 321–341. https://doi.org/10.1007/BF00976252

Costin, F., Greenough, W.T. and Menges, R.J. (1973). Student ratings of college teaching: Reliability, validity, and usefulness. The Journal of Economic Education, 5(1), 51–53. doi: 10.1080/00220485.1973.10845382

Marks, M.C. (1988). Professional Practice: A Ticket Out the Door. Strategies, 17-27. doi: 10.1080/08924562.1988.10591654

Newton, A.H. (2015). Use of the ‘Stop, Start, Continue’ method is associated with the production of constructive qualitative feedback by students in higher education. Assessment & Evaluation in Higher Education, 755-767. http://dx.doi.org/10.1080/02602938.2014.956282

CU Science Education Initiative and the UBC Carl Wieman SEI (2009). An Instructor’s Guide to the Effective Use of Personal Response Systems (Clickers) in Teaching. Available at: https://cwsei.ubc.ca/sites/default/files/cwsei/resources/instructor/Clicker_guide_CWSEI_CU-SEI.pdf

Inclusivity note

Having a regular, simple lesson evaluation mechanism in place helps students feel heard and see that their opinion matters. Asking an open question such as ‘how did you find the lesson?’ may seem inclusive, but students may need a while to come up with meaningful evaluative feedback. Providing simple prompts, as suggested in the case study, might help start them off.

For students to feel safe to provide genuine feedback, the methods should be low-stakes and, importantly, anonymous.